- Keyword-Driven Testing (KDT) is a battle-tested method to speed up test automation development and cut down scaling costs in the long run.

- This article gives you the best practices that we’ve crystallized from our 20+ years of helping clients successfully build and maintain their Keyword-Driven Testing frameworks.

- To reap the full reward of Keyword-Driven Testing, you should 1) organize tests into self-contained test modules with clear test objectives, 2) separate Business-Level from Interaction-Level tests, and 3) architect a robust keyword library.

- As Keyword-Driven Testing is a widely adopted method, you can apply these best practices to many test automation tools such as Robot Framework, Unified Functional Testing (UFT), TestArchitect, TestComplete, and Ranorex.

Why Keyword-Driven Testing?

Why is Keyword-Driven Testing such a big deal? Simply because it makes economic sense. We’ve observed many test teams successfully transform their test projects using KDT. The method increased their agility and saved them a lot of money in the long run compared to the early-days approaches.

Early-days Test Automation Approach

In the early days of UI test automation, test engineers automated tests by simply transcribing steps written in English into a programming language. Thus test code grew in direct proportion to the number of test cases: one test case, one corresponding (often longer) test script. As a result, test development cost spun out of control as the number of automated test cases grew.

Additionally, since test automation frameworks were often poorly architected, there was not much code reuse. If someone needed to automate a new UI test, they had to write the bits and pieces of it from scratch. Consequently, test development speed suffered. Automation engineers struggled to deliver the automated tests for a software feature even many sprints after that feature had reached code-complete.

The Business Case of Keyword-Driven Testing

Then came the era of Keyword-Driven Testing.

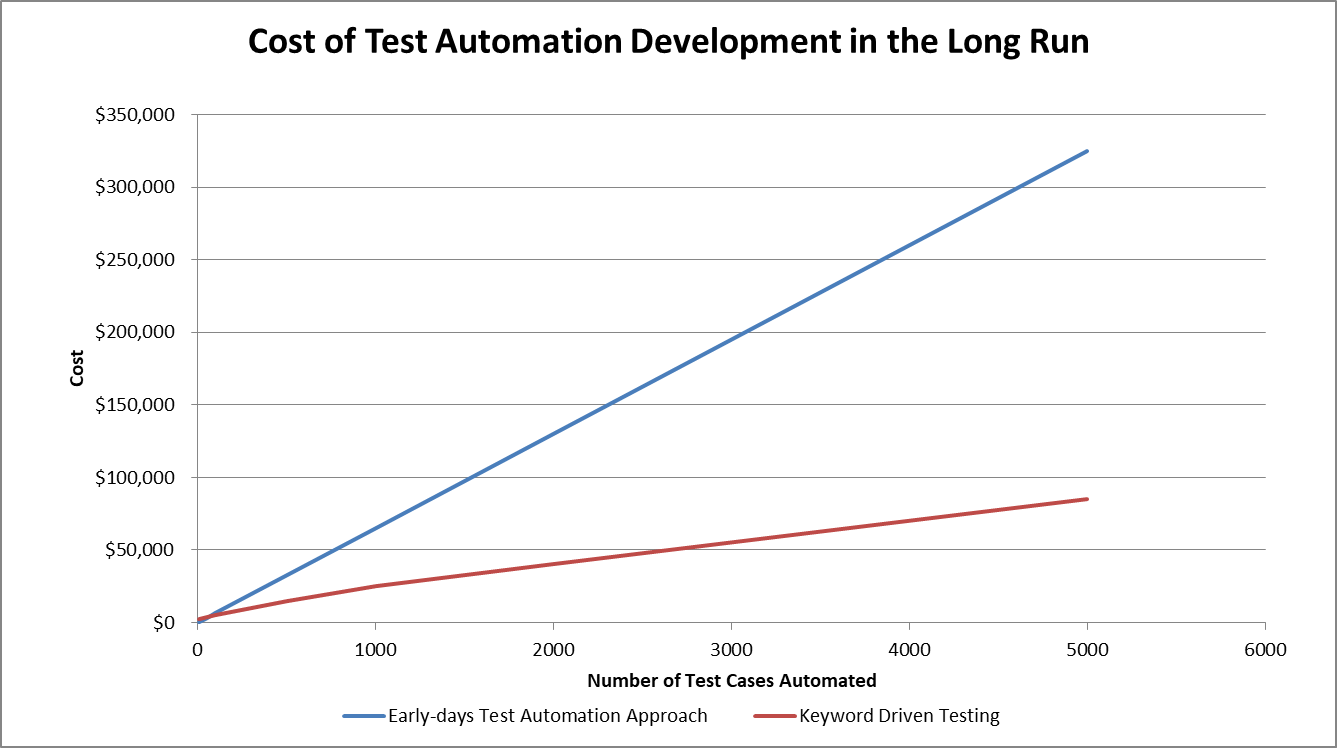

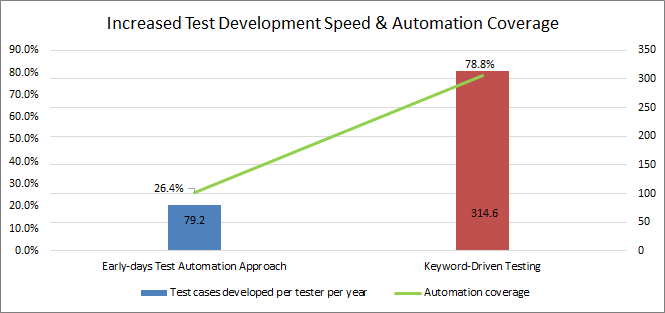

With KDT, you still have to write the test cases. But the number of lines of code drops dramatically thanks to the high reusability of keywords across test cases. The chart below shows how much money KDT could save in the long run compared to the early-days test automation approach.
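To make the reuse argument concrete, here is a minimal sketch of a keyword-driven runner. It is not taken from any particular tool: the toy `accounts` application, the three keywords, and the `run_test` helper are all assumptions made for illustration. The point is that each keyword is coded once, while every additional test case is just rows of data.

```python
# Hypothetical keyword implementations against a toy in-memory "application".
accounts = {}

def create_account(name, balance):
    accounts[name] = int(balance)

def deposit(name, amount):
    accounts[name] += int(amount)

def check_balance(name, expected):
    actual = accounts[name]
    assert actual == int(expected), f"{name}: expected {expected}, got {actual}"

# The keyword library maps human-readable names to code that is written once.
KEYWORDS = {
    "create account": create_account,
    "deposit": deposit,
    "check balance": check_balance,
}

def run_test(rows):
    """Execute a test case given as rows of (keyword, arg1, arg2, ...)."""
    for keyword, *args in rows:
        KEYWORDS[keyword](*args)

# Two test cases reuse the same three keywords; neither adds new code.
single_deposit_case = [
    ("create account", "alice", "100"),
    ("deposit", "alice", "50"),
    ("check balance", "alice", "150"),
]
two_deposits_case = [
    ("create account", "bob", "0"),
    ("deposit", "bob", "20"),
    ("deposit", "bob", "30"),
    ("check balance", "bob", "50"),
]

run_test(single_deposit_case)
run_test(two_deposits_case)
```

In the early-days approach, each of these test cases would have been its own script. Here, a third or a hundredth test case costs only a few more rows.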

Our own statistics also show that test teams applying KDT super-charged their test development speed from around 80 to over 300 automated test cases per tester per year. With such an amazing speed, these teams were able to close the gap between development and testing. Their automation coverage, therefore, jumped to almost 80%.

Although KDT is a widely adopted method, not every test team implements it well, no matter how long they have been using it. The best practices below are meant to help you reap the full rewards of KDT.

Three Keyword-Driven Testing Best Practices You Can’t Afford to Miss

These best practices are useful both to test teams that are just getting their feet wet in the KDT journey and to veteran teams looking for a fresh perspective on improving their long-standing KDT framework.

- Organize tests into self-contained Test Modules with clear Test Objectives

- Separate Business-level from Interaction-level tests

- Architect a robust keyword library

Let’s dive into each best practice below.

#1. Organize Tests into Self-Contained Test Modules with Clear Test Objectives

One of the core principles that Hans Buwalda, CTO of LogiGear, frequently advocates in his workshops is that the key to successful automation is test design, in stark contrast to the common belief that treats automation purely as a technical challenge. Smart test teams should invest adequately in test design from the get-go.

High-level Test Design

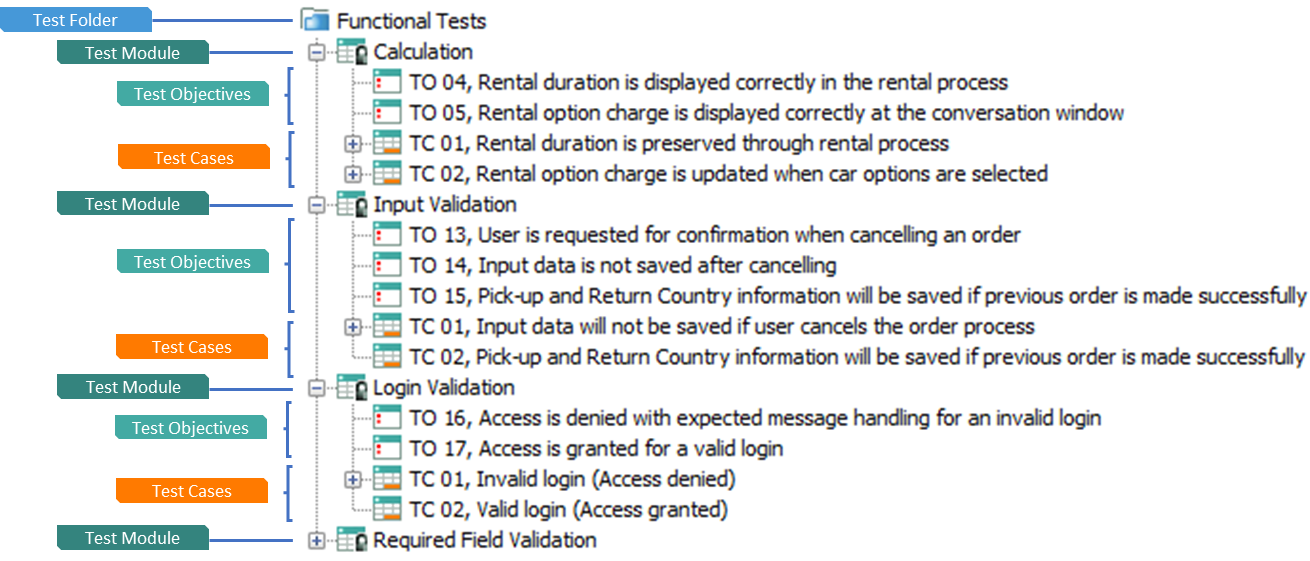

The process of test design starts with analyzing test requirements. Test teams should break down said test requirements into multiple test objectives. Afterward, test cases that share the same test objectives should be grouped into different self-contained test modules.

The project structure below illustrates how you could group test cases into test modules. Structuring your test project like this pays off because it makes your KDT framework easier to maintain and manage.

When choosing a KDT test tool, ensure that it enables you to organize tests in this fashion. Test objectives should be clearly documented and linked to test modules and test cases. It’s a plus if the tool also stores these test items as objects in a database so that you can create customized reports on test development progress and make the project’s health visible to all stakeholders.

Don’t worry if you haven’t covered all the test requirements from the start. With this high-level test design in place, you already have a strong foundation. Later on, you can come back and add more test cases and test modules as new testing ideas come to mind or new test requirements appear. It’s also not a problem to reorder or move test cases around to improve the quality of test modules.

Low-level Test Design

Inside a test module, it’s common practice to “chain” the test cases together. A test case can use the state left behind by the previous test case. This chaining technique helps you create a continuous test flow. It also saves you time in test preparation and cleanup. For instance, you don’t have to reload test data or log into the system for each test case.

On the other hand, you should keep test modules independent of each other to satisfy the “self-contained” criterion. If test modules depend on each other, you won’t be able to freely rearrange their order in the smoke or regression test suites, and you’ll have made your life harder by adding an extra layer of complexity.
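The two rules above (chain test cases inside a module, keep modules independent) can be sketched as follows. The `Module` class, the test case functions, and the dictionary-based state are hypothetical names chosen for this illustration and do not come from any specific tool.

```python
import random

class Module:
    """A self-contained test module: own setup, chained test cases."""

    def __init__(self, name, objective, setup, test_cases):
        self.name = name
        self.objective = objective
        self.setup = setup            # builds a fresh state for this module only
        self.test_cases = test_cases  # run in order, sharing the module's state

    def run(self):
        state = self.setup()
        for test_case in self.test_cases:
            test_case(state)          # each case may rely on the previous one
        return state

# Test cases of a hypothetical "Customers" module, chained through `state`.
def tc01_create_customer(state):
    state["customers"].append("Alice")
    assert "Alice" in state["customers"]

def tc02_rename_customer(state):
    # Relies on the customer left behind by tc01; no reload or re-login needed.
    index = state["customers"].index("Alice")
    state["customers"][index] = "Alice Smith"
    assert state["customers"] == ["Alice Smith"]

# Test case of a hypothetical "Cars" module with its own, separate state.
def tc01_add_car(state):
    state["cars"].append("sedan")
    assert state["cars"] == ["sedan"]

modules = [
    Module("Customers", "Customer records can be created and edited",
           lambda: {"customers": []},
           [tc01_create_customer, tc02_rename_customer]),
    Module("Cars", "Cars can be added to the inventory",
           lambda: {"cars": []},
           [tc01_add_car]),
]

# Modules share nothing, so a suite may run them in any order.
random.shuffle(modules)
results = {module.name: module.run() for module in modules}
```

Because each module builds its own state in `setup`, shuffling the suite does not change the outcome. Swapping `tc01` and `tc02` inside the "Customers" module would break it, which is the accepted cost of chaining.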

#2. Separate Business-Level from Interaction-Level Tests

Business Flows Analysis

To increase test coverage, we usually advise our clients to start analyzing test requirements using a methodical approach called the “Business Flows” technique. When trying to test a software system, find out what the Business Flows are and what Business Objects get involved in those Business Flows.

Business Objects are the items the system-under-test keeps data about. For instance, customers and cars in a car sales system are Business Objects. But don’t forget conceptual objects such as orders, invoices, account receivables, etc.

Most system events go through multiple steps and involve more than one Business Object. As a result, the data of those Business Objects in the database gets updated. We call each of these processes a Business Flow.

For example, an internet user opens a browser, navigates to an e-commerce page, searches for a product, adds some personal information, places an order, pays by credit card, and then checks back on the order status. You should capture this entire “story” as a Business Flow. Some may describe it as a user journey.

Organizing Tests Using Business

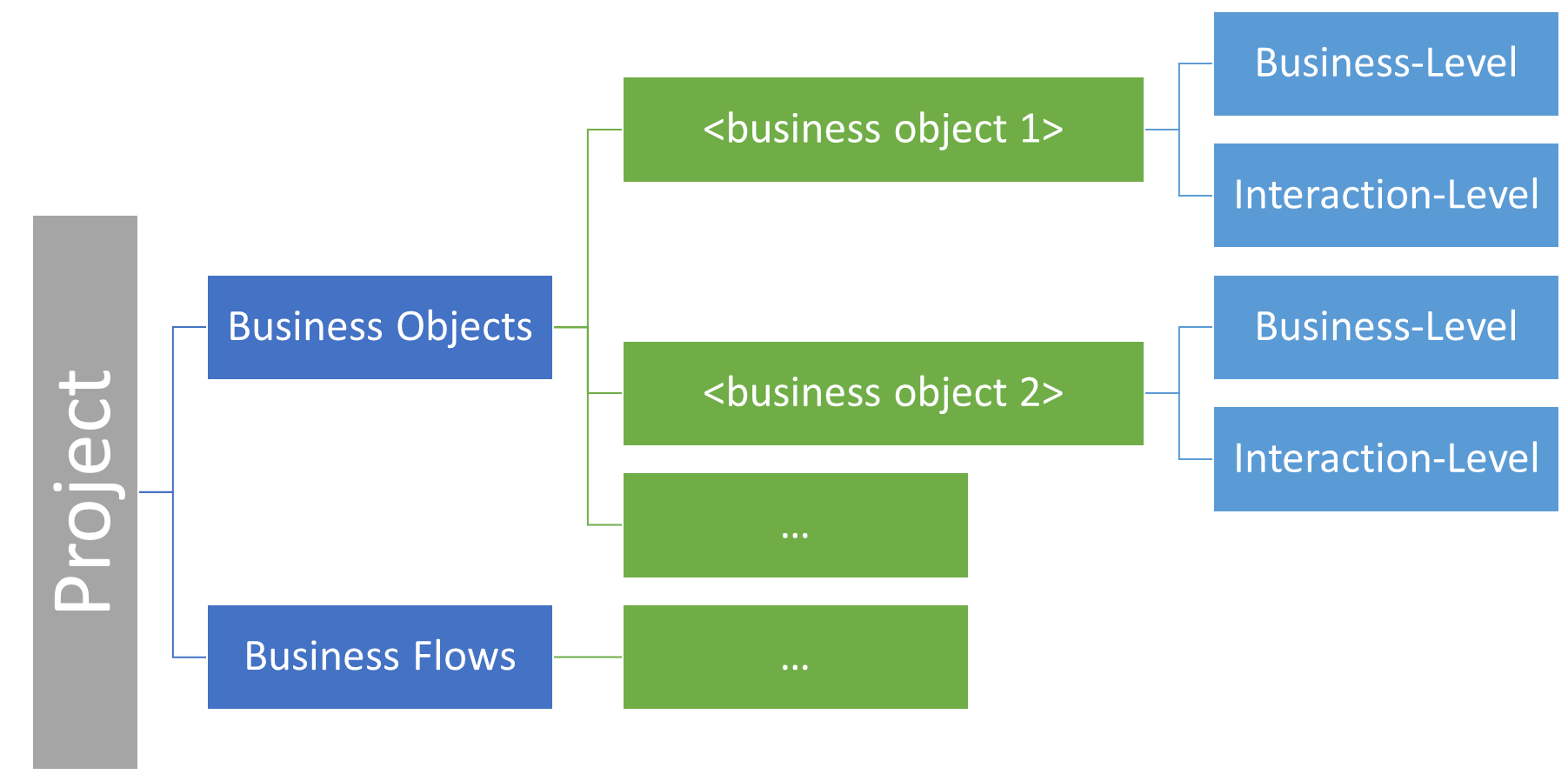

Based on the Business Flows analysis, you can group your test cases by Business Objects and Business Flows. Use the template below as a starting point for structuring your test project.

You may notice that under each Business Object, we further group test cases into 2 types: Business-Level and Interaction-Level.

- Business-Level Tests: these tests focus on the business operations performed on Business Objects. We usually use the abbreviation CRUD (Create, Read, Update, and Delete) to describe the lifecycle of Business Objects. However, besides those basic operations, you might want to perform other operations on Business Objects such as copy, move, categorize, enumerate, convert, serialize, import, export, etc. Testing those operations is also part of Business-Level Tests.

- Interaction-Level Tests: these tests focus on the UI interactions such as verifying whether the “Check Out” button exists, whether the system validates the “Email” text field correctly, or whether users are able to use the keyboard shortcuts (hotkeys), etc. Although they sound basic, these tests are necessary to identify the root cause of test failures.

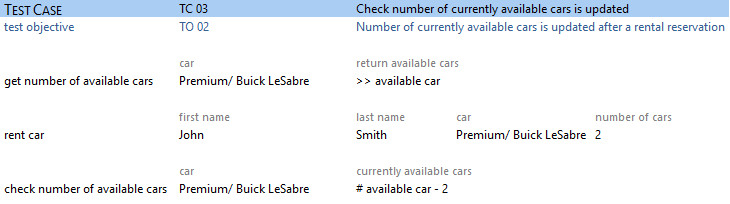

The pictures below illustrate the contrast between a Business-Level test and an Interaction-Level test.

When designing tests, try to separate the Business-Level and Interaction-Level tests and put them in different buckets. We have seen too many test teams freak out when, all of a sudden, they received a test report full of errors and failed checks in the middle of the night simply because they had mixed Interaction-Level and Business-Level checkpoints into one lengthy test flow.
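One way to picture the "different buckets" idea is a runner that tags each test with its level and reports each level separately. This is only a sketch under assumptions: the toy `form` dictionary, the `is_valid_email` rule, and the `run_by_level` helper are invented for this example.

```python
from collections import defaultdict

# A toy checkout form standing in for the system under test (hypothetical).
form = {"buttons": ["Check Out"], "orders": []}

def is_valid_email(text):
    # Deliberately simplistic validation rule, for illustration only.
    return "@" in text and "." in text.split("@")[-1]

# Interaction-Level checks: one UI detail each.
def check_out_button_exists():
    assert "Check Out" in form["buttons"]

def email_field_rejects_bad_input():
    assert not is_valid_email("not-an-email")

# Business-Level check: an operation on the "order" Business Object.
def order_can_be_created():
    form["orders"].append({"id": 1, "status": "placed"})
    assert form["orders"][0]["status"] == "placed"

SUITE = [
    ("interaction", check_out_button_exists),
    ("interaction", email_field_rejects_bad_input),
    ("business", order_can_be_created),
]

def run_by_level(suite):
    """Run every test and collect results in a separate bucket per level."""
    report = defaultdict(list)
    for level, test in suite:
        try:
            test()
            report[level].append((test.__name__, "passed"))
        except AssertionError:
            report[level].append((test.__name__, "failed"))
    return dict(report)

report = run_by_level(SUITE)
```

With the buckets kept apart, a failed Interaction-Level check shows up only in the interaction bucket, so it is easier to tell whether a business operation itself is broken.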

#3. Architect a Robust Keyword Library

The core of the test development activity is of course about writing the actual tests. When writing tests, there will be times when the keyword we’re looking for doesn’t exist yet. In such a case, there’s an easy fix – write a new one.

Building new keywords is an art in itself. I’ve collected some hard-learned lessons below to help you architect a robust keyword library for your KDT project.

Don’t Repeat Yourself (DRY)

Before creating a new keyword, verify that no similar keyword already exists in your keyword library. Creating a duplicate keyword is a big mistake that can come back to bite you in the form of technical debt.

To avoid duplication, establish a naming convention, and persuade your team to stick to it. For instance, if you name the first keyword “check account balance”, don’t name the second keyword “verify car exists”. Although “check” and “verify” are semantically the same, having both words floating around creates unnecessary headaches.
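A naming convention is easier to keep when the library itself enforces it. The sketch below assumes a team convention of one approved verb per meaning. The `SYNONYMS` table and the `KeywordLibrary` class are hypothetical and not part of any real tool.

```python
# Assumed team convention: map each discouraged verb to the approved one.
SYNONYMS = {"verify": "check", "validate": "check", "input": "enter", "type": "enter"}

class KeywordLibrary:
    def __init__(self):
        self._keywords = {}

    def register(self, name, func):
        # Normalize case and spacing so near-identical names collide.
        normalized = " ".join(name.lower().split())
        verb = normalized.split(" ", 1)[0]
        if verb in SYNONYMS:
            raise ValueError(
                f'"{name}": use "{SYNONYMS[verb]}" instead of "{verb}"')
        if normalized in self._keywords:
            raise ValueError(f'"{name}" already exists in the library')
        self._keywords[normalized] = func

    def names(self):
        return sorted(self._keywords)

library = KeywordLibrary()
library.register("check account balance", lambda: None)
library.register("check car exists", lambda: None)

# Both of these are rejected: a discouraged verb and a disguised duplicate.
rejected = []
for name in ("verify car exists", "Check  Account Balance"):
    try:
        library.register(name, lambda: None)
    except ValueError as error:
        rejected.append(str(error))
```

A check like this could run in a pre-commit hook or a review script, so duplicates are caught before they reach the shared library.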

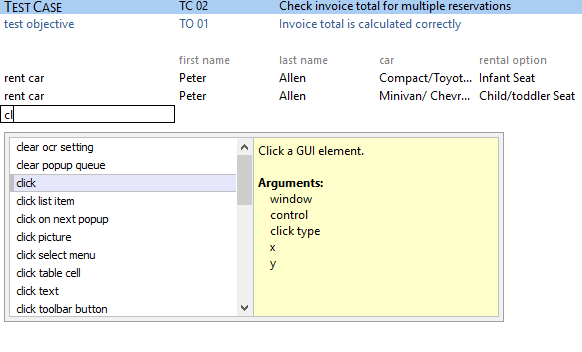

From a tooling perspective, choose a test tool with an auto-complete function that searches the whole keyword library (both built-in and user-scripted keywords) and suggests the best keyword match as you type. Such a function helps you find the keywords you need faster and avoid creating duplicates.

For instance, the picture below shows the auto-complete menu that pops up as you type a keyword name in a test case (screenshot taken from the TestArchitect IDE).

It’s also a bonus if the auto-complete menu contains useful information such as the keyword’s description and the arguments needed. After you pick a keyword, the auto-complete function should also automatically populate the required fields such as argument names and argument default values. This function certainly saves you some time and speeds up your test development.

Atomic Keywords

When you do have to create a brand new keyword, choose a clear keyword name and descriptive arguments. Most importantly, each keyword should have only one clear function. If a keyword cannot be further broken down into smaller keywords, we call that keyword “atomic”.

On the other hand, if one keyword performs multiple unrelated functions, you’ll soon find yourself violating the DRY rule above: duplicating a keyword to create your own flavor because the original keyword only satisfies 70% of your requirements. If such a situation arises, spend a little more time splitting the big keyword into two or more “atomic” keywords.

Atomic keywords offer higher reusability. And reusability is the key success factor that boosts your test development speed. Once you finish constructing a strong foundation of “atomic” keywords, it becomes easier to build complex Business-Level keywords later on (see the section below).
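As a small illustration of atomicity, consider the sketch below. The toy `session` state and the three keywords are assumptions made for this example.

```python
# Toy application state (hypothetical).
session = {"user": None, "orders": []}

# Atomic keywords: each one does exactly one thing.
def log_in(user):
    session["user"] = user

def create_order(product, quantity):
    assert session["user"] is not None, "log in first"
    session["orders"].append({"product": product, "quantity": int(quantity)})

def check_order_count(expected):
    assert len(session["orders"]) == int(expected)

# A combined keyword such as log_in_and_create_order(user, product, quantity)
# would serve only the "one order per login" case; a tester who needs two
# orders in one session would have to copy and modify it.
log_in("alice")
create_order("tire", 4)
create_order("wiper", 2)
check_order_count(2)
```

The same three keywords cover single-order, multi-order, and login-only tests without any new code.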

Begin with Scalability in Mind

For larger projects, we often advise our clients to divide the keyword library into three levels called “Low-Level”, “Middle-Level”, and “High-Level” keywords. Having different levels of abstraction is key in scaling up a KDT project.

- High-Level Keywords are the business-oriented keywords, like “create a customer” or “rent car”. They hide UI interaction details like which screens to go through and which buttons to click.

- Low-Level Keywords are related to the GUI (or even CLI or API) of the application. These low-level keywords are used to create Interaction-Level tests (described in Best Practice #2).

- Middle-Level Keywords deal with navigation details and data entry that can be shared among high-level keywords for easier maintenance. For instance, the high-level “enter tax form” keyword can combine multiple middle-level keywords such as “enter personal information”, “enter dependents”, and “enter preparer information”.

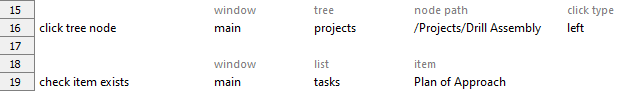

If at all possible, choose a test automation tool that allows you to combine low-level keywords to form middle-level and high-level keywords without too much coding. The picture below shows two low-level keywords.

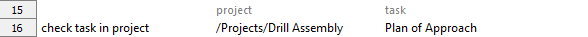

You can combine these two low-level keywords (“check tree item” and “check list item exists”) to form one higher-level keyword named “check task in the project”. Then replace the two keyword calls in the test case with a single call to “check task in the project”.
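In code, the composition just described might look like the sketch below. The `ui` dictionary is a hypothetical stand-in for the application's project tree and task lists, and the function bodies are illustrative, not the tool's real implementation.

```python
# Hypothetical UI model: a project tree and the task list of each project.
ui = {
    "tree": ["Website Redesign", "Mobile App"],
    "lists": {"Website Redesign": ["Draft wireframes", "Review copy"]},
}

# Low-level keywords: one UI check each.
def check_tree_item(item):
    assert item in ui["tree"], f'tree item "{item}" not found'

def check_list_item_exists(list_name, item):
    assert item in ui["lists"].get(list_name, []), f'list item "{item}" not found'

# Higher-level keyword: composed from the two low-level keywords above.
def check_task_in_project(project, task):
    check_tree_item(project)
    check_list_item_exists(project, task)

# The test case now needs one call instead of two.
check_task_in_project("Website Redesign", "Review copy")
```

If the UI later changes how tasks are displayed, only `check_task_in_project` and the keywords beneath it need updating. The test cases that call it stay untouched.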

Higher-level keywords increase reusability. Additionally, they can be well understood by the business, domain experts, or even IT and SecOps specialists. Thus they are often used as an effective communication device among different roles inside and outside a team.

As you now have many levels of abstraction, the abstraction level of the keywords used in a test module should follow the scope of that module. In particular, low-level keywords like “click” and “enter” should be used in Interaction-Level test modules.

On the other hand, high-level keywords like “enter customer” and “check account balance” are better suited for Business-Level test modules as well as end-to-end acceptance testing.

Low-level Keywords Should Be Platform-Agnostic

Your test automation tool should abstract away the technical minutiae of the platform under test. For instance, it’d be very tiring to maintain separate platform-dependent versions of one keyword such as “click button on Windows”, “click button on macOS”, “click button using Selenium”, “click button on Android”, “click button on iOS”, and so on.

Therefore, the ideal tool should provide platform-agnostic low-level keywords. These keywords should not depend on a particular application or platform. Keywords like “click”, “select menu item”, or “send HTTP request” should run smoothly across as many applications and platforms as possible.
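One common way to get platform-agnostic keywords is to put a driver interface between the keyword and the platform. The sketch below uses stub drivers that only record what they would do. The `Driver`, `WebDriverStub`, `AndroidDriverStub`, and `Keywords` classes are hypothetical and do not wrap any real automation library.

```python
from abc import ABC, abstractmethod

class Driver(ABC):
    """Platform-specific backend; the keyword layer only sees this interface."""

    @abstractmethod
    def click(self, target):
        ...

class WebDriverStub(Driver):
    def __init__(self):
        self.log = []

    def click(self, target):
        self.log.append(f"web: click element '{target}'")

class AndroidDriverStub(Driver):
    def __init__(self):
        self.log = []

    def click(self, target):
        self.log.append(f"android: tap view '{target}'")

class Keywords:
    """One 'click' keyword for every platform; the driver supplies the details."""

    def __init__(self, driver):
        self.driver = driver

    def click(self, target):
        self.driver.click(target)

def run_checkout_test(keywords):
    # The test is written once and knows nothing about the platform.
    keywords.click("Check Out")

web, android = WebDriverStub(), AndroidDriverStub()
for driver in (web, android):
    run_checkout_test(Keywords(driver))
```

Supporting a new platform then means adding one driver class rather than a new copy of every keyword.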

A robust keyword library is the most important factor for the success of a KDT project. You can expect the ROI of thoughtful keyword design to be as high as 10x. If there’s one thing you can’t afford to overlook in KDT, it’s the art of architecting a robust keyword library.

Conclusion

Keyword-Driven Testing is powerful. However, implementing an efficient KDT framework is by no means an easy job. Architecting and implementing a framework requires extensive system thinking, design thinking, and technical proficiency. It’s also an iterative process of continuous improvements over time.

If you stick to the three best practices listed above, test automation with KDT will become the pleasant journey it’s supposed to be.

Tip: If you love Keyword-Driven Testing as a test method, consider adopting one of the test tools that embody the tenets of KDT. Action-Based Testing is the modern evolution of Keyword-Driven Testing. Check out this method and its accompanying test automation tool (TestArchitect).
